VR-Based Control of Multi-Copter Operation

Immersive Third-Person Teleoperation with Real-Time 3D Reconstruction

Project Overview

Figure 1: Operator wearing Meta Quest 3 headset for immersive drone teleoperation, experiencing third-person view with real-time 3D environmental reconstruction.

We present a VR-based teleoperation system for multirotor flight that renders a third-person view (TPV) of the vehicle with live 3D reconstruction of its surroundings. This addresses fundamental limitations of traditional methods: direct line-of-sight restricts range, while first-person video (FPV) limits situational awareness to the camera's field of view.

The system runs on an NVIDIA Jetson Orin NX with ROS2-WebXR integration and streams geometry and video to a Meta Quest 3 headset, so the drone can be controlled in unmapped spaces. The operator sees the vehicle and its surroundings from outside the onboard camera's field of view, so the third-person perspective gives better spatial awareness for safe navigation in complex environments.

In experiments, TPV achieved task times comparable to FPV while improving obstacle awareness (+0.20m minimum distance) and eliminating contacts. TPV preserves control quality while exposing hazards invisible in FPV.

Methodology

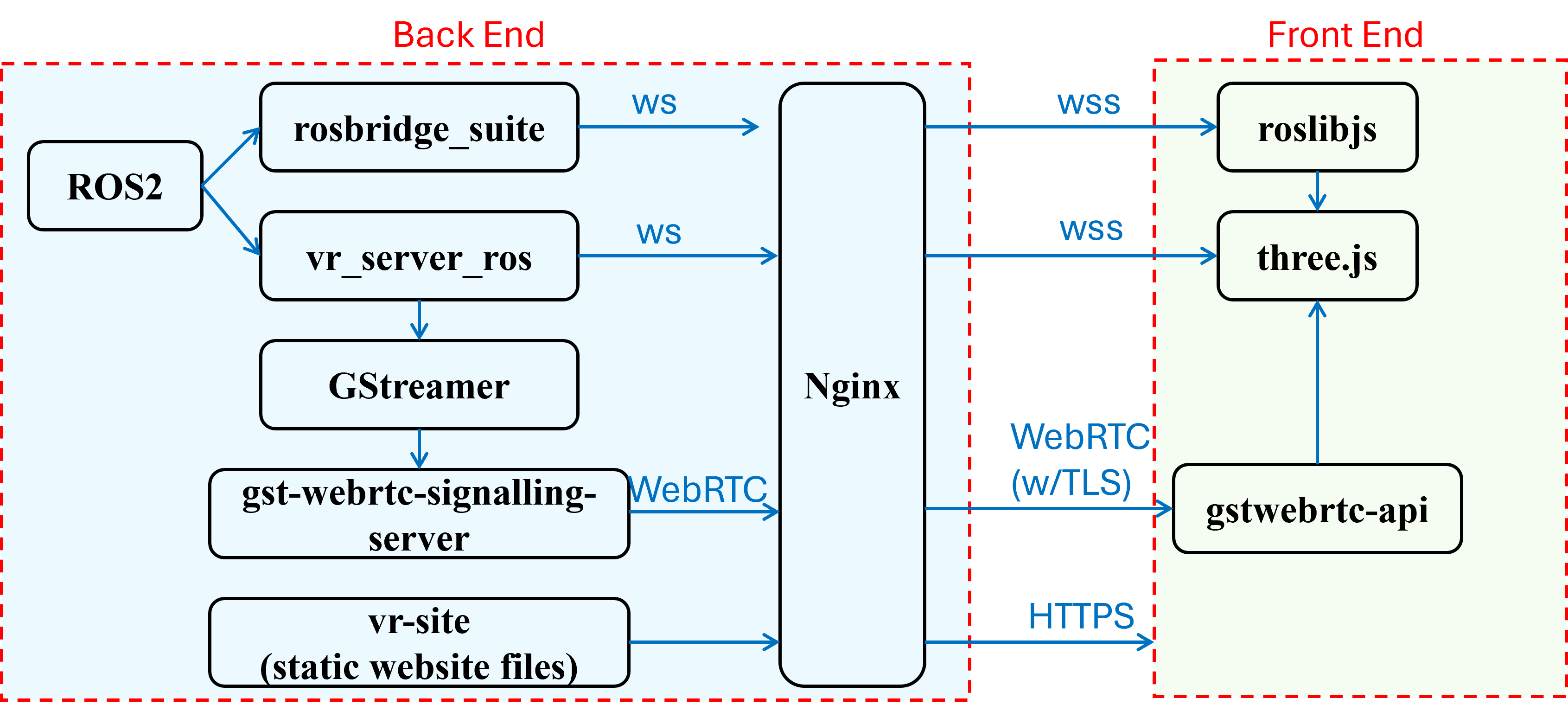

System Architecture

The VR teleoperation system integrates onboard perception, a web-based VR interface, and flight control to enable immersive, real-time drone control. The architecture consists of four main components working in concert:

- Hexacopter Platform: Custom-built hexacopter equipped with NVIDIA Jetson Orin NX (1024-core Ampere GPU, 8-core ARM Cortex-A78AE CPU, 16GB LPDDR5 memory) and StereoLabs ZED X Mini Camera (600p at 60 FPS, 110° H × 80° V FOV, 0.1m-8m depth range)

- Real-Time SLAM: ZED SDK performs simultaneous localization and mapping, generating live 3D mesh reconstruction of the environment without requiring prior maps

- VR Interface: Meta Quest 3 headset renders the virtual environment using WebXR and Three.js, with ROS2 communication via rosbridge_server for low-latency control

- Control System: Cascaded position and attitude controllers with a Pixhawk 6C mini flight controller, and Vicon motion capture for high-precision pose estimation
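On the headset side, the link to ROS2 runs through rosbridge_server, which exchanges JSON operations over a WebSocket using the rosbridge v2 protocol. The sketch below builds the two kinds of message a browser client would send to stream a position setpoint: one `advertise` op, then repeated `publish` ops. It is an illustration under stated assumptions, not the project's code: the topic name `/vr/cmd_setpoint`, the use of `geometry_msgs/msg/PoseStamped` as the command type, and the `map` frame id are all hypothetical, since the page does not say which ROS2 interfaces carry the VR controller input.

```python
import json

# Hypothetical topic and message type: the project does not specify which
# ROS2 interfaces carry VR controller commands.
TOPIC = "/vr/cmd_setpoint"
MSG_TYPE = "geometry_msgs/msg/PoseStamped"


def advertise_op(topic: str, msg_type: str) -> str:
    """rosbridge v2 'advertise' op: announce that this client will publish."""
    return json.dumps({"op": "advertise", "topic": topic, "type": msg_type})


def publish_setpoint_op(topic: str, x: float, y: float, z: float,
                        frame_id: str = "map") -> str:
    """rosbridge v2 'publish' op carrying a position setpoint as a PoseStamped.

    The frame_id default is a hypothetical choice for illustration.
    """
    msg = {
        "header": {"frame_id": frame_id},
        "pose": {
            "position": {"x": x, "y": y, "z": z},
            # Identity quaternion: no commanded rotation.
            "orientation": {"x": 0.0, "y": 0.0, "z": 0.0, "w": 1.0},
        },
    }
    return json.dumps({"op": "publish", "topic": topic, "msg": msg})


if __name__ == "__main__":
    # These strings would be sent as text frames over the WebSocket
    # (rosbridge_server listens on port 9090 by default).
    print(advertise_op(TOPIC, MSG_TYPE))
    print(publish_setpoint_op(TOPIC, 1.0, 0.0, 1.5))
```

In a WebXR page the same JSON would usually be produced by a client library such as roslibjs; keeping the wire format to plain rosbridge operations means the onboard side can remain a stock rosbridge_server.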

Third-Person View Implementation

The virtual environment uses the coordinate system created by the ZED camera, with the multi-copter's starting position as the origin. Before rendering, this coordinate system is shifted 5 meters in front of the pilot, allowing them to see the drone and environment as if standing in front of it rather than being co-located. This third-person perspective provides:

- Enhanced Spatial Awareness: Operators can see the drone's position relative to obstacles from all directions, not just the camera's forward view

- Lateral and Rear Hazard Detection: TPV reveals obstacles that would be invisible in traditional FPV systems

- Improved Depth Perception: The 3D reconstruction from the stereo camera, rendered stereoscopically in the headset, provides distance cues for safer navigation
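The frame shift described above can be written as a fixed rotation followed by a translation. This sketch assumes that the ZED map frame follows the ROS REP-103 convention (x forward, y left, z up), that the headset scene uses the WebXR/Three.js convention (x right, y up, viewer facing -z), and that the drone's starting heading is aligned with the pilot's forward direction. The 5 m offset comes from the text; the function names are hypothetical, and no floor-height offset is applied.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]

# Offset from the text: the map origin (drone start pose) is placed 5 m in
# front of the pilot. In WebXR/Three.js the viewer looks down -Z, +Y is up.
TPV_OFFSET_M = 5.0


def ros_to_webxr(p: Vec3) -> Vec3:
    """Rotate a point from a REP-103 frame (x fwd, y left, z up) into a
    WebXR/Three.js frame (x right, y up, -z forward). Both are right-handed."""
    x, y, z = p
    return (-y, z, -x)


def map_to_tpv(p: Vec3, offset: float = TPV_OFFSET_M) -> Vec3:
    """Express a map-frame point in the pilot's frame, with the map origin
    shifted `offset` metres in front of the pilot (along -Z)."""
    vx, vy, vz = ros_to_webxr(p)
    return (vx, vy, vz - offset)


if __name__ == "__main__":
    print(map_to_tpv((0.0, 0.0, 0.0)))  # take-off point, 5 m ahead of pilot
    print(map_to_tpv((1.0, 0.0, 0.0)))  # 1 m further ahead of take-off
```

Under these assumptions a drone 1 m ahead of its take-off point appears 6 m in front of the pilot and is seen from behind, so the reconstructed scene extends away from the operator rather than surrounding them.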

Low-Latency Communication Pipeline

The system employs three optimized communication channels to minimize latency:

- ROS2 Communication: Control signals from the VR controllers and tracking data are transmitted via the rosbridge suite

- Custom Binary Mesh Format: 3D mesh data sent via WebSocket in optimized binary format to minimize serialization overhead

- WebRTC Video Stream: Low-latency, low-bandwidth video streaming for real-time visual feedback
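The page does not document the custom binary mesh layout, so the following is a hypothetical format that shows why a binary encoding is cheaper than JSON: a little-endian header with two `uint32` counts, then `float32` xyz vertex positions, then `uint32` triangle indices. Neither side has to format or parse numbers as text.

```python
import struct
from typing import List, Sequence, Tuple

# Hypothetical header: vertex count, index count (both little-endian uint32).
HEADER = struct.Struct("<II")


def pack_mesh(vertices: Sequence[Tuple[float, float, float]],
              indices: Sequence[int]) -> bytes:
    """Serialize a triangle mesh into a compact little-endian binary blob."""
    if len(indices) % 3:
        raise ValueError("index count must be a multiple of 3 (triangles)")
    flat = [c for v in vertices for c in v]
    return (HEADER.pack(len(vertices), len(indices))
            + struct.pack(f"<{len(flat)}f", *flat)          # float32 xyz
            + struct.pack(f"<{len(indices)}I", *indices))   # uint32 indices


def unpack_mesh(blob: bytes) -> Tuple[List[Tuple[float, ...]], List[int]]:
    """Inverse of pack_mesh, i.e. what the headset-side decoder would do."""
    n_verts, n_idx = HEADER.unpack_from(blob, 0)
    off = HEADER.size
    flat = struct.unpack_from(f"<{3 * n_verts}f", blob, off)
    off += 12 * n_verts  # 3 floats x 4 bytes per vertex
    idx = list(struct.unpack_from(f"<{n_idx}I", blob, off))
    verts = [tuple(flat[i:i + 3]) for i in range(0, len(flat), 3)]
    return verts, idx


if __name__ == "__main__":
    tri = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
    blob = pack_mesh(tri, [0, 1, 2])
    print(len(blob), unpack_mesh(blob))
```

Because the header is 8 bytes and every element is 4 bytes wide, both arrays start on a 4-byte boundary, so a browser client could view the received ArrayBuffer directly with `new Float32Array(buf, 8, 3 * nVerts)` and a matching `Uint32Array` instead of copying it.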

Post-optimization latency measurements achieved a median of 16.1ms, a mean of 29.8ms, and a P95 of 86.0ms, with only 3.16% of frames exceeding 100ms. The median is well below the 54ms median perception threshold for real-time interaction.
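The four latency figures can be computed from a log of per-frame latencies as shown below. The page does not state how latency was timestamped or which percentile estimator was used; this sketch assumes linear interpolation between sorted samples (Python's `statistics.quantiles` with `method="inclusive"`) and treats the 100 ms spike threshold as a parameter.

```python
import statistics
from typing import Dict, Sequence


def latency_summary(samples_ms: Sequence[float],
                    spike_ms: float = 100.0) -> Dict[str, float]:
    """Summarize per-frame latency: median, mean, P95, and % of spikes."""
    if len(samples_ms) < 2:
        raise ValueError("need at least two samples")
    # 'inclusive' interpolates linearly between sorted samples
    # (the same rule as numpy.percentile's default 'linear' method).
    pcts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    spikes = sum(1 for s in samples_ms if s > spike_ms)
    return {
        "median": statistics.median(samples_ms),
        "mean": statistics.mean(samples_ms),
        "p95": pcts[94],  # cut point 95 of 99
        "pct_over_spike": 100.0 * spikes / len(samples_ms),
    }


if __name__ == "__main__":
    print(latency_summary([10.0, 20.0, 30.0, 40.0, 150.0]))
```

Reporting the median alongside the mean and P95 matters here because a few long frames pull the mean (29.8ms) well above the median (16.1ms).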

System Components

Figure 2: System architecture for VR-based perception and control. The hexacopter equipped with NVIDIA Jetson Orin NX and ZED camera performs real-time SLAM, streaming 3D mesh geometry and video to the Meta Quest 3 headset via ROS2-WebXR integration. The operator controls the drone using VR controllers with low-latency feedback.

Figure 3: The hexacopter platform featuring custom 3D-printed mounts for the NVIDIA Jetson Orin NX and StereoLabs ZED X Mini Camera. The system is powered by a 4-cell LiPo battery with voltage regulation, and uses a Pixhawk 6C mini flight controller with Vicon motion capture for precise pose estimation.

Experimental Results

Experimental Protocol

Two trained pilots completed matched trials comparing TPV (third-person VR with live mapping) against an FPV baseline (video-only). Each pilot executed a standardized protocol: takeoff → approach a wall and stop at commanded standoff distance → return to starting position → land safely. Quantitative analysis reports repeated trials from one pilot (N=8, four per condition), which keeps between-pilot variability out of the comparison.

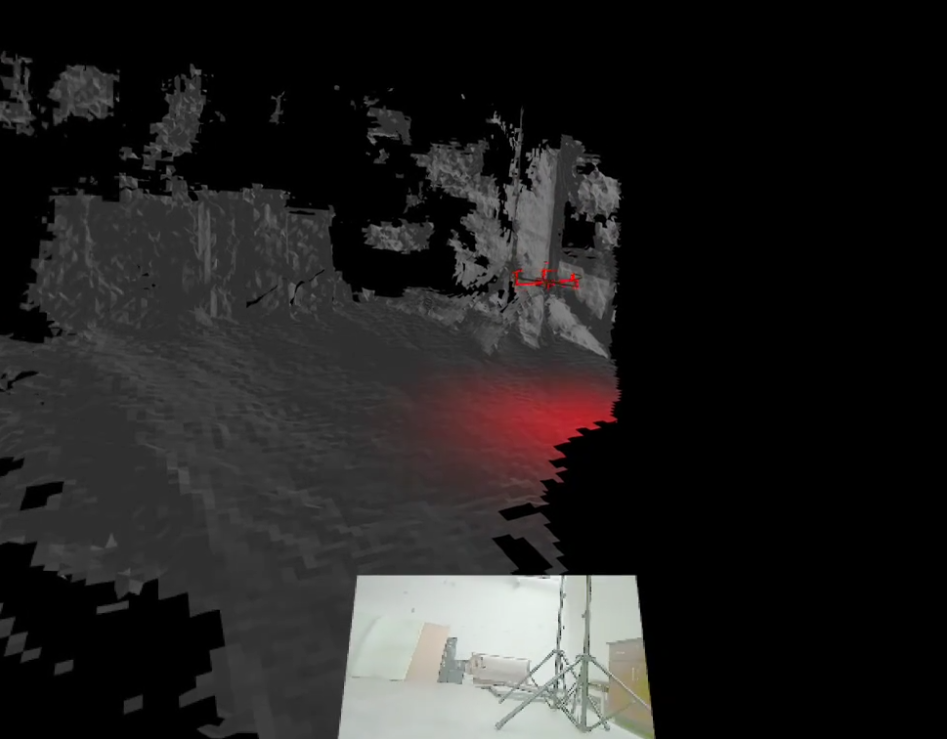

Figure 4: Screenshot of the VR interface showing the partially reconstructed environment, hexacopter model, and live video stream. The real-time 3D mesh provides spatial context while the video feed offers detailed visual information, combining the strengths of both modalities for enhanced situational awareness.

Performance Comparison: TPV vs FPV

The experimental results show that third-person view teleoperation improves safety margins while maintaining comparable task performance:

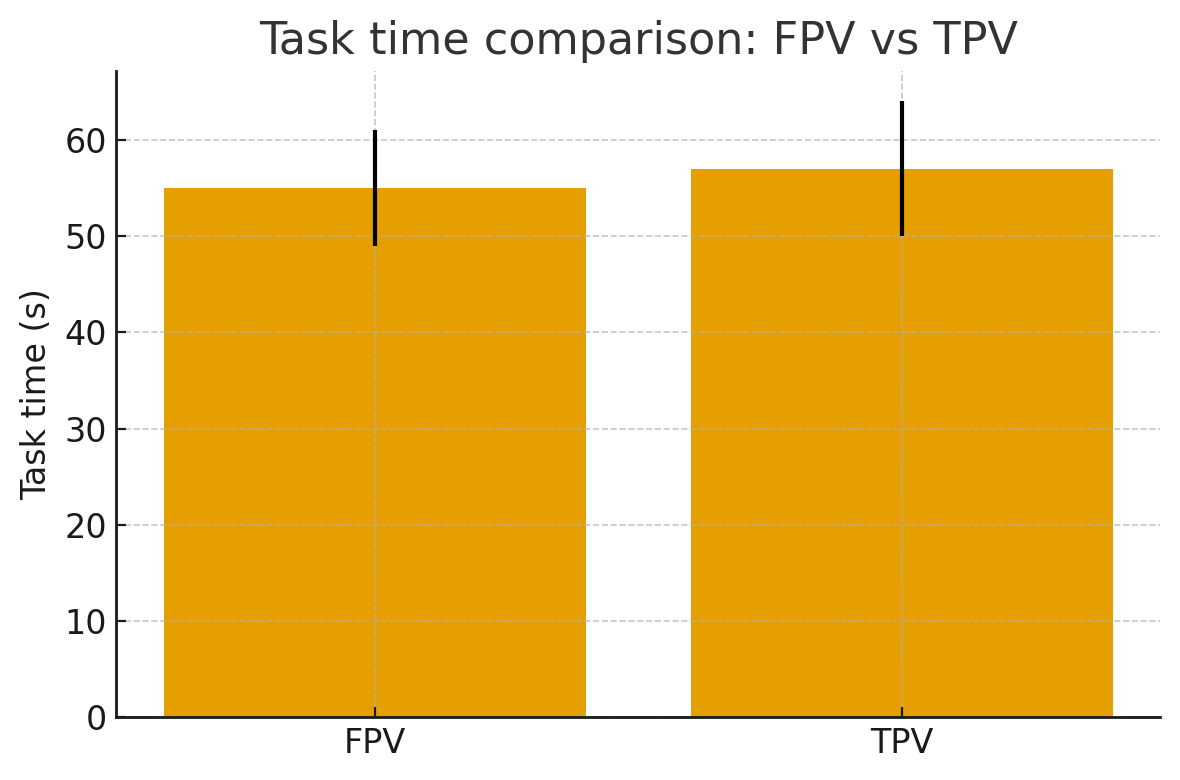

Figure 5a: Task completion time comparison. FPV: 55±6 seconds, TPV: 57±7 seconds. The results show that TPV achieves comparable task times to FPV, indicating that the enhanced spatial awareness does not come at the cost of operational efficiency.

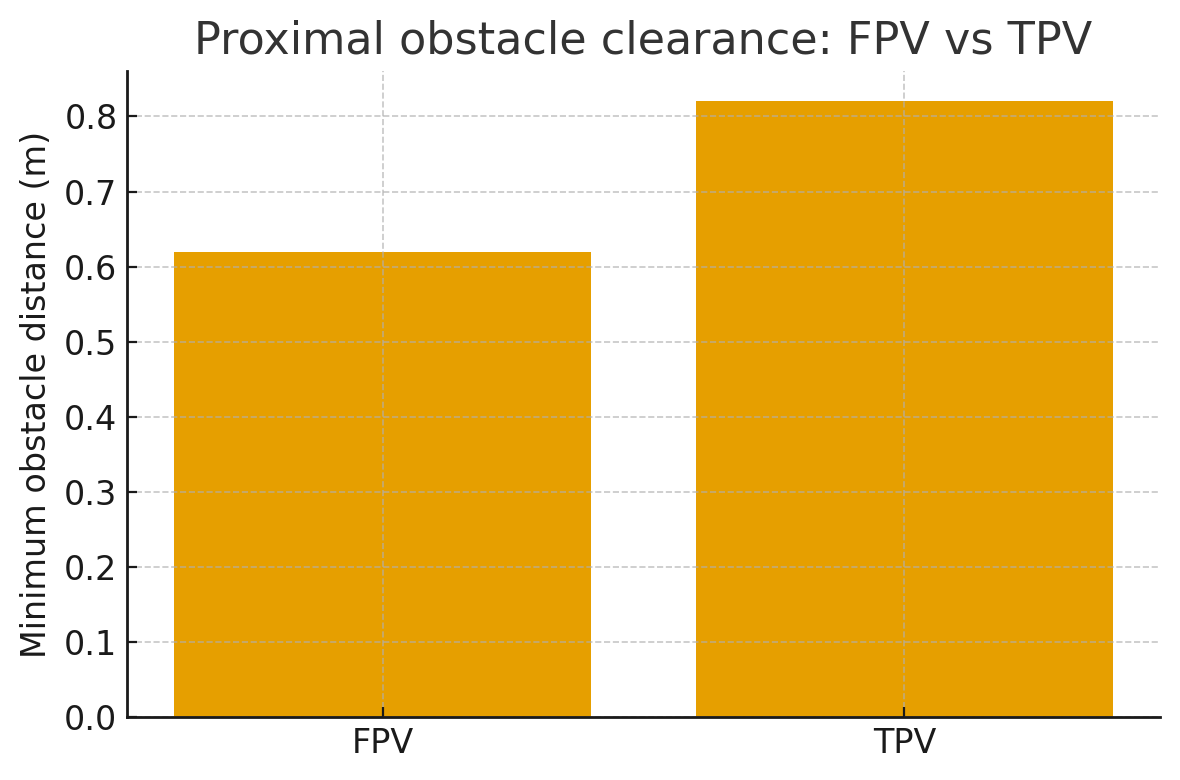

Figure 5b: Minimum obstacle distance metrics. FPV: 0.62m, TPV: 0.82m (+0.20m improvement). TPV improved proximal obstacle awareness, allowing pilots to maintain safer distances from obstacles while navigating the environment. Additionally, TPV reduced contacts (FPV: 1/4 trials, TPV: 0/4 trials).

Key Findings

- Comparable Task Performance: TPV achieved task completion times within 4% of the FPV baseline (FPV 55±6s vs TPV 57±7s)

- Improved Safety Margins: Minimum obstacle distance increased by 32% (+0.20m), from 0.62m to 0.82m

- Reduced Collision Risk: Zero contacts in TPV trials (0/4) compared to FPV (1/4)

- Real-Time Performance: Post-optimization latency median of 16.1ms enables responsive control

- Enhanced Situational Awareness: TPV exposes lateral and rear hazards invisible in FPV, supporting safer teleoperation in unknown environments

- Path Efficiency: Path length and velocity smoothness differed by less than 5% between conditions
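The relative figures in this list follow directly from the reported means, taking FPV as the baseline. A short check of the arithmetic:

```python
def pct_change(baseline: float, value: float) -> float:
    """Relative change of `value` with respect to `baseline`, in percent."""
    return 100.0 * (value - baseline) / baseline


# Means reported above, with FPV as the baseline.
time_change = pct_change(55.0, 57.0)       # task completion time, s
clearance_change = pct_change(0.62, 0.82)  # minimum obstacle distance, m
print(f"time: +{time_change:.1f}%  clearance: +{clearance_change:.1f}%")
# prints: time: +3.6%  clearance: +32.3%
```

The 2 s difference in task time is also smaller than the standard deviation of either condition (6 s and 7 s).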

Technical Contributions

Immersive VR Teleoperation

First VR-based drone control system integrating real-time 3D reconstruction with third-person perspective for enhanced spatial awareness

Real-Time SLAM Integration

Live environment mapping without prior knowledge, enabling safe navigation in previously unmapped spaces

Low-Latency Architecture

Optimized ROS2-WebXR pipeline achieving 16.1ms median latency for responsive real-time control

Enhanced Safety

Demonstrated a 32% improvement in obstacle clearance and zero contacts in TPV trials through superior hazard visibility

Publication

VR-Based Control of Multi-Copter Operation

arXiv preprint arXiv:2505.22599 - 2025

Robotics (cs.RO), Human-Robot Interaction, Virtual Reality, Teleoperation